OpenAI recently updated its ChatGPT API with a function calling capability. In short, it lets the user tell the GPT model that one or more functions are available for it to use.

Conceptually, when the user asks for today’s weather and describes an available API for querying weather information, the AI model would try to call that API to get the weather. In practice, the model cannot invoke the function directly; instead, it specifies which function it wants to call and the arguments to pass to it. The user can then invoke the function locally.
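As a rough sketch of that flow, the snippet below dispatches a function call locally without touching the network. The `get_weather` function, the `dispatch` helper, and the hand-written `mock_message` are all hypothetical stand-ins; only the general shape of the message (a `function_call` with a `name` and JSON-encoded `arguments`) follows what the API returns.

```python
import json

def get_weather(city):
    """Hypothetical local function; a real one would query a weather API."""
    return f"Sunny in {city}"

# Hand-written stand-in for the assistant message the API would return.
# Note that "arguments" is a JSON string, not a dict.
mock_message = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "get_weather",
        "arguments": json.dumps({"city": "Seattle"}),
    },
}

# Map the names we described to the model onto real local callables.
available_functions = {"get_weather": get_weather}

def dispatch(message):
    """Look up the requested function and call it with the decoded arguments."""
    call = message["function_call"]
    func = available_functions[call["name"]]
    kwargs = json.loads(call["arguments"])
    return func(**kwargs)

result = dispatch(mock_message)
print(result)  # Sunny in Seattle
```

The model only ever produces the name and the arguments; everything that actually runs is code on the caller's side.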

It seems to be a really powerful capability and makes the AI model look more like a general-purpose agent. Imagine what the AI model can do if one supplies a long list of available functions to the GPT model.

My first reaction to this news was to re-evaluate how GPT handles the sequence addition question.

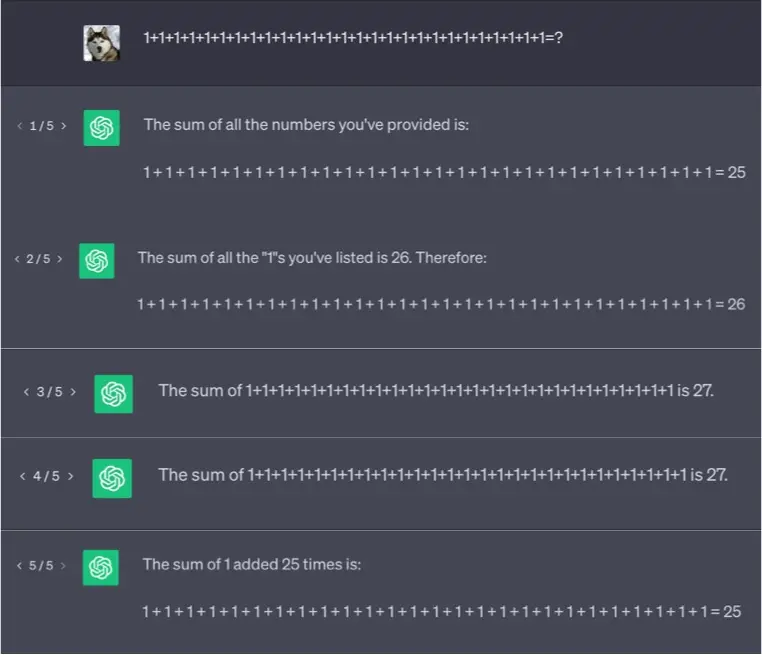

The question is to sum up 27 1s. ChatGPT failed on this question: 3 out of 5 times, it gave the wrong answer.

While the function call feature may not be designed for this specific use case, I am curious whether function calling could help here.

The implementation is quite straightforward. The basic version is a single-round conversational request.

    import openai

    # The question: 27 ones joined by "+", followed by "=?"
    question = "+".join(["1"] * 27) + "=?"
    messages = [
        {"role": "user", "content": question}
    ]

    # Single-round request, no functions supplied
    response = openai.ChatCompletion.create(
        model="gpt-3.5-turbo-0613",
        messages=messages
    )
    print(response["choices"][0]["message"])

The responses vary because of sampling randomness; over this limited sample of 5 tries, the accuracy is 60%.

# Question:

{'role': 'user', 'content': '1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1=?'}

# Answer:

{

"role": "assistant",

"content": "The sum of all the 1s is 27."

}

{

"role": "assistant",

"content": "The sum of the given numbers is 27."

}

{

"role": "assistant",

"content": "The sum of all the 1's is 27."

}

{

"role": "assistant",

"content": "The sum of 1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1 is 28."

}

{

"role": "assistant",

"content": "The sum of 1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1 is 28."

}

On top of this, a function that calculates a sum is described and supplied in the API request. The ChatGPT model then indicates whether it wants to call the function.

If it does, we can invoke the function locally and feed the return value back to the AI model in the next round of the conversation.

    import json

    # `openai` and `messages` are the ones set up in the basic version above

    def get_sum(inputs):
        # Local function the model can ask us to call
        return sum(inputs)

    # JSON Schema description of get_sum, sent along with the request
    functions = [
        {
            "name": "get_sum",
            "description": "Get the sum of a list of numbers",
            "parameters": {
                "type": "object",
                "properties": {
                    "inputs": {
                        "type": "array",
                        "description": "the list of numbers to be summed up",
                        "items": {"type": "number"}
                    }
                },
                "required": ["inputs"],
            },
        },
    ]

    response = openai.ChatCompletion.create(
        model="gpt-3.5-turbo-0613",
        messages=messages,
        functions=functions,
        function_call="auto"
    )
    response_message = response["choices"][0]["message"]

    # The model asked for a function call: run it locally and report back
    if response_message.get("function_call"):
        available_functions = {"get_sum": get_sum}
        function_name = response_message["function_call"]["name"]
        function_to_call = available_functions[function_name]
        function_args = json.loads(response_message["function_call"]["arguments"])
        function_response = function_to_call(inputs=function_args.get("inputs"))

        # Append the model's request and the function result to the conversation
        messages.append(response_message)
        messages.append(
            {
                "role": "function",
                "name": function_name,
                "content": str(function_response),
            }
        )

        # Second round: let the model phrase the final answer
        second_response = openai.ChatCompletion.create(
            model="gpt-3.5-turbo-0613",
            messages=messages,
        )
        response_message = second_response["choices"][0]["message"]

    print(response_message)

Unfortunately, the accuracy is still 60% given 5 tries.

# Question:

{'role': 'user', 'content': '1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1=?'}

# Answer:

{

"role": "assistant",

"content": "The sum of 1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1 is 27."

}

{

"role": "assistant",

"content": "The sum of 1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1 is 29."

}

{

"role": "assistant",

"content": "The sum of 1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1 is 27."

}

{

"role": "assistant",

"content": "1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 = 28"

}

{

"role": "assistant",

"content": "The sum of 1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1 is 27."

}

It seems obvious now that the AI model gets it wrong because it fails at counting, not at summing. It should perform better with some guidance.
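One way to check the counting-versus-summing idea is to look at the expression the model echoes back and compare its number of terms with the question's. The snippet below is only a sketch: `echoed_terms` is a hypothetical helper, and the response string is generated for illustration (one extra term) rather than copied from the runs above.

```python
import re

def echoed_terms(response_text):
    """Return the integers of the first a+b+c... expression found in the text."""
    match = re.search(r"\d+(?:\s*\+\s*\d+)+", response_text)
    if match is None:
        return []
    return [int(tok) for tok in re.findall(r"\d+", match.group(0))]

question_terms = [1] * 27

# Illustrative response in which the model repeats the sequence with 28 terms
response = "The sum of " + "+".join(["1"] * 28) + " is 28."

terms = echoed_terms(response)
print(len(question_terms), len(terms), sum(terms))  # 27 28 28
```

In a case like this the stated sum matches the echoed expression, so the arithmetic is fine; the error is in how many terms were copied.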

I asked:

“1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1=?, count the number of numbers first and then calculate the sum”

The results are surprisingly good: 5 out of 5 times it is correct, an accuracy of 100%!

{

"role": "assistant",

"content": "There are 27 numbers to be added together. \nThe sum of all these numbers is 27."

}

{

"role": "assistant",

"content": "The number of numbers is 27, because we have listed 27 repetitions of \"1\".\nNow, we can calculate the sum: 27 * 1 = 27.\nThus, the sum of 1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1 is 27."

}

{

"role": "assistant",

"content": "There are 27 numbers. Now, let's calculate the sum:\n\n1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 = 27"

}

{

"role": "assistant",

"content": "The number of numbers is 27. \nThe sum of these numbers is 27."

}

{

"role": "assistant",

"content": "There are 27 numbers to add.\n\n1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 = 27\n\nSo, the sum is 27."

}

To convince myself that the trick works, I increased the number of retries to 50, and the model still performed well: 49 out of 50 times, it gave the correct answer. It is not perfect, but I guess one can add more tricks to improve it further.

I also noticed that, for this specific case, supplying a function is a distraction to the GPT model. Given the above question and the “get_sum” function, the responses over 5 retries are as follows:
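Tallying accuracy over many retries is easy to automate. The sketch below assumes the model's answer is the last integer in the response text, which is a heuristic and not something the API guarantees. `final_number` and `accuracy` are hypothetical helpers, and the canned replies stand in for real API responses.

```python
import re

def final_number(text):
    """Take the last integer in the response as the model's answer (heuristic)."""
    numbers = re.findall(r"\d+", text)
    return int(numbers[-1]) if numbers else None

def accuracy(ask, expected, trials):
    """Call ask() `trials` times and return the fraction of correct answers."""
    correct = sum(final_number(ask()) == expected for _ in range(trials))
    return correct / trials

# Canned replies standing in for API responses; in a real run, ask() would
# send the request and return response["choices"][0]["message"]["content"].
canned = iter([
    "The sum is 27.",
    "There are 27 numbers. The sum is 27.",
    "The sum is 28.",
    "So, the sum is 27.",
])

rate = accuracy(lambda: next(canned), expected=27, trials=4)
print(rate)  # 0.75
```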

{

"role": "assistant",

"content": "The sum of the numbers 1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1 is 27."

}

{

"role": "assistant",

"content": "The sum of the numbers 1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1 is 28."

}

{

"role": "assistant",

"content": "The sum of the numbers 1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1 is 28."

}

{

"role": "assistant",

"content": "The sum of the numbers 1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1 is 28."

}

{

"role": "assistant",

"content": "The sum of all 1s is 28."

}

I think there may be a better way to leverage function calls here because, after all, the AI model is not weak at summing up.

While doing this experiment, I thought for a moment that I had solved the problem with a nice prompt engineering technique. Only after making some twists to the question did I realize that it works only for a certain form.

For example, changing only one number in the addition sequence leads to a catastrophic failure:
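One option I have not tested is to force the call instead of leaving the choice to the model. As far as I understand the function calling API, passing `function_call={"name": ...}` instead of `"auto"` makes the model call that specific function. The sketch below only builds the request arguments; `build_forced_request` is a hypothetical helper, and whether this improves accuracy is an open question, since the model still has to copy the right number of terms into `inputs`.

```python
def get_sum(inputs):
    """Same local function as above."""
    return sum(inputs)

functions = [
    {
        "name": "get_sum",
        "description": "Get the sum of a list of numbers",
        "parameters": {
            "type": "object",
            "properties": {
                "inputs": {
                    "type": "array",
                    "description": "the list of numbers to be summed up",
                    "items": {"type": "number"},
                }
            },
            "required": ["inputs"],
        },
    }
]

def build_forced_request(messages):
    """Build request arguments that name get_sum explicitly instead of 'auto'."""
    return {
        "model": "gpt-3.5-turbo-0613",
        "messages": messages,
        "functions": functions,
        "function_call": {"name": "get_sum"},
    }

request = build_forced_request([{"role": "user", "content": "1+1+1=?"}])
print(request["function_call"])
# With the client used in this post, the call would then be:
# response = openai.ChatCompletion.create(**request)
```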

# Question:

{"role": "user", "content": "2+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1=?, count the number of numbers first and then calculate the sum"},

# Answer:

{

"role": "assistant",

"content": "The number of numbers in the equation is 27. \n\nAdding all the numbers together, we get:\n\n2 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 = 27\n\nTherefore, the sum is 27."

}

{

"role": "assistant",

"content": "There are 27 numbers in the equation. \n\nBy adding them up:\n\n2+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1+1 = 27.\n\nSo, the sum is 27."

}

{

"role": "assistant",

"content": "There are a total of 27 numbers in the given sequence. To calculate the sum of these numbers, we add them up:\n\n2 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 = 27\n\nTherefore, the sum of the 27 numbers is 27."

}

{

"role": "assistant",

"content": "The number of numbers is 27.\n\nTo calculate the sum, we simply add all the numbers together:\n2 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 = 32.\n\nTherefore, 2 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 = 32."

}

{

"role": "assistant",

"content": "There are 27 numbers in the given equation. Now we can calculate the sum:\n\n2 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1\n\n= 27\n\nTherefore, the sum of all the numbers is 27."

}

The counting is still correct, but the summing is wrong (the correct sum is 28). It feels like the model was misled by the “counting first” hint. When the numbers in the sequence differ, counting them first does feel like a misleading hint. After some additional experiments, I think this hypothesis may be right. With the “counting first” prompt, I also tried the following questions, in which all the numbers in the sequence are the same. The model works well on the 3s question; the 2s responses shown below, however, do not reach the correct sum of 54.

# Question:

{"role": "user", "content": "2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2=?, count the number of numbers first and then calculate the sum"}

# Answer:

{

"role": "assistant",

"content": "The sum of 2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2 is 56."

}

{

"role": "assistant",

"content": "The sum of 2 added 26 times is 52."

}

{

"role": "assistant",

"content": "The sum of 2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2 is 60."

}

{

"role": "assistant",

"content": "The sum of 2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2 is 56."

}

{

"role": "assistant",

"content": "The sum of 2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2+2 is 56."

}

# Question:

{"role": "user", "content": "3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3=?, count the number of numbers first and then calculate the sum"}

# Answer:

{

"role": "assistant",

"content": "There are 27 numbers in the addition expression. Calculating the sum:\n\n3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 + 3 = 81"

}

{

"role": "assistant",

"content": "There are a total of 27 numbers:\n\n3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3 = 27\n\nNow, calculating the sum:\n\n27 x 3 = 81"

}

{

"role": "assistant",

"content": "There are 27 numbers.\nSum = 27 x 3 = <<27*3=81>>81"

}

{

"role": "assistant",

"content": "There are 27 numbers being added together. \n\nTo calculate the sum, we can multiply 3 by 27: \n\n3 * 27 = 81 \n\nTherefore, the sum of 3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3 is 81."

}

{

"role": "assistant",

"content": "There are 27 numbers in the expression. \n\nTo calculate the sum, we can multiply the number of occurrences (27) by the value of each number (3). \n\n27 x 3 = 81 \n\nSo, 3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3+3 is equal to 81."

}

Given the inherent uncertainty of the GPT model, I don’t think we can draw any firm conclusion here. Based on these experiments, run on a limited budget, I would say that for this long sequence addition problem, the “counting first” trick can help when all the numbers are the same.
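For reference, the expected answers are easy to compute locally. The sketch below rebuilds the four questions programmatically, assuming each has 27 terms as described in this post, and sums them with a hypothetical `expected_sum` helper.

```python
def expected_sum(expression):
    """Sum the integer terms of an 'a+b+c=?' style question."""
    body = expression.split("=")[0]
    return sum(int(term) for term in body.split("+"))

# Questions rebuilt under the assumption of 27 terms each
questions = {
    "27 ones": "+".join(["1"] * 27) + "=?",
    "one 2, then 26 ones": "+".join(["2"] + ["1"] * 26) + "=?",
    "27 twos": "+".join(["2"] * 27) + "=?",
    "27 threes": "+".join(["3"] * 27) + "=?",
}

for label, question in questions.items():
    print(label, expected_sum(question))
# 27 ones 27
# one 2, then 26 ones 28
# 27 twos 54
# 27 threes 81
```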

It is very interesting to play with this model on this type of question, though it is still unclear to me why the model cannot answer this relatively trivial question correctly.
